In October of 2019, three months into my tenure at Contrast Security, I received a challenge question from a customer prospect in the northern Atlanta suburbs who was using a competitor’s legacy static application security testing (SAST) solution. In a nutshell, he posed the following: “Static analysis (SAST) guarantees me 100% code coverage. Can your product guarantee me the same?”

Claim: SAST and 100% Code Coverage

The question was rooted in the fact that Contrast is an instrumentation-based technology that is embedded within the application itself—rather than a traditional application security tool that scans lines of code looking for known threats. This prospect believed that legacy SAST guaranteed that his company’s code was completely tested and protected—satisfying peace-of-mind and risk-aversion motivators that are key to any security organization.

A week or two later, I found myself in Orlando at a conference, sharing a ride to the airport with Contrast’s CTO and co-founder Jeff Williams. I posed the same question to Jeff to hear his thoughts. His response came in his usual jovial and enthusiastic way: “Well, no, we can’t [offer 100% code coverage], but neither can SAST!” As he elaborated, I realized that I already knew the answer from my days selling SAST at Coverity (now Synopsys) and Klocwork (now Rogue Wave).

SAST, of course, is a compile-time technology, but the fact that it compiles every line of code does not mean that it 1) analyzes every code path or 2) does so for every possible value. In fact, it does neither, not by a long shot.
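Compiling every line is not the same as covering every path. A minimal C sketch makes the point. The function names are hypothetical and not taken from any SAST product. Each independent `if` doubles the number of paths, so n sequential branches yield 2^n paths, while the line count grows only linearly.

```c
/* Hypothetical routine with three independent branches. Each `if` can be
   taken or skipped, so there are 2 * 2 * 2 = 8 distinct paths through it,
   even though every line is compiled exactly once. */
int three_branches(int a, int b, int c) {
    int r = 0;
    if (a > 0) r += 1;
    if (b > 0) r += 2;
    if (c > 0) r += 4;
    return r;                 /* each path yields a distinct r in 0..7 */
}

/* Number of paths through n sequential, independent if-statements: 2^n.
   Valid for n < 64 with a 64-bit unsigned long long. */
unsigned long long path_count(unsigned n) {
    return 1ULL << n;
}
```

Three branches give 8 paths. Thirty give 1,073,741,824, and real routines add loops and nested calls on top of that.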

Before I proceed, a brief disclaimer: The SAST landscape has changed quite a bit over the past decade. While I’m going to explain this in decade-old terms, the underlying principles remain the same.

A Quick SAST History Lesson

Circa 2010, the biggest players in what was then called static analysis for code quality were Coverity, Klocwork, Fortify (the lone major player on “the security side”), and a couple of others, including Perforce, which was known as the “academic” of the group. Perforce’s analysis engine was believed to perform the deepest and most comprehensive analysis. But where a “typical” legacy SAST analysis with all checkers turned on roughly doubled an application’s build time (very broadly speaking, between one and eight hours for a sufficiently large application with little or no build parallelization), Perforce extended build time to literally weeks.

Even in a world that was still rife with waterfall approaches and where DevOps was still relatively nascent, companies could not afford this kind of lag between development iterations. As a result, Perforce was used only by organizations where the cost of product failure was extremely high, such as embedded device manufacturers (e.g., a NASA booster rocket failure that turned a $100 million investment into an explosion), or by smaller organizations with no sense of urgency (e.g., universities and research institutions).

For most companies, slow performance wasn’t SAST’s only problem: legacy SAST is also very computationally expensive. The leading vendors of the time quickly optimized and cut corners in their scan engines in order to 1) vastly reduce time to value and 2) offer more value and capability than the pre-SAST status quo of five years prior.

The SAST False Negative

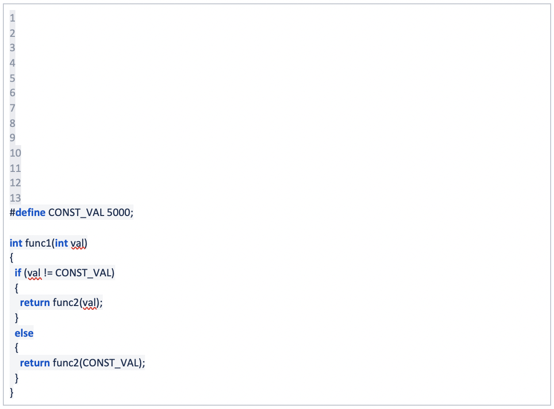

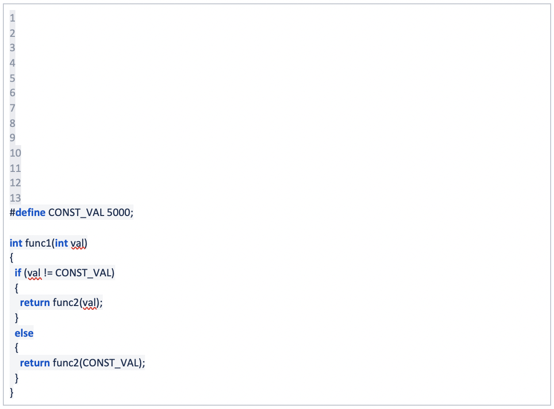

Beyond being slow and expensive, SAST has an even more serious problem: it is prone to inaccuracy in the form of false negatives. Its code checks are based on known threats, which means that unknown and zero-day attacks can get past SAST controls. Consider the following C-style code:

“func1” receives an integer value. To analyze it with 100% assurance, a SAST engine would have to test the function with every possible integer value: 2^64 of them for a 64-bit integer (from −2^63 to 2^63 − 1). As a result, legacy SAST vendors chose to test only certain values. In this case, a SAST tool would test only the following:

- INT_MAX, INT_MIN

- -1, 0, 1

- Any constant integer values that were explicitly referenced in the code. In this case, 5000 might also be tested.

Of course, this code is extremely simple. In the real world, a given routine calls myriad subroutines. What happens if the error occurs only when the value 17 is passed into func1? A SAST solution will not identify this problem, because it probably never tested that value. This is one of the primary reasons that SAST produces so many false negatives. To catch these missed issues, a SAST vendor would need to universally increase analysis times, which would put it at an extreme competitive disadvantage.
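The sampling trade-off can be sketched in C as a minimal model under stated assumptions. The body of `func1` below is hypothetical, since the original example is not reproduced here. The candidate list mirrors the values described above, and the one-billion-checks-per-second rate is an illustrative assumption. Real SAST engines reason about value ranges symbolically rather than calling the function, so this only illustrates why a fixed sample can miss a value like 17 and why sweeping every value is off the table.

```c
#include <limits.h>
#include <stddef.h>

/* Hypothetical stand-in for func1 (the article's real func1 is not shown
   here): it misbehaves, returning -1, only when passed 17. */
int func1(int value) {
    if (value == 17) {
        return -1;                      /* the latent defect */
    }
    return value > 5000 ? 1 : 0;        /* 5000: a constant a tool might harvest */
}

/* Simplified model of the sampled values listed above: boundaries,
   values around zero, and a constant referenced in the code. */
static const int kCandidates[] = { INT_MAX, INT_MIN, -1, 0, 1, 5000 };

/* Counts how many sampled values expose a defect (a negative result). */
size_t count_failures(int (*fn)(int)) {
    size_t failures = 0;
    for (size_t i = 0; i < sizeof kCandidates / sizeof kCandidates[0]; ++i) {
        if (fn(kCandidates[i]) < 0) {
            ++failures;
        }
    }
    return failures;
}

/* Seconds in a 365.25-day year. */
#define SECONDS_PER_YEAR (365.25 * 24.0 * 60.0 * 60.0)

/* Years to try every value of a `bits`-wide integer at an ASSUMED rate of
   `checks_per_second`; back-of-the-envelope arithmetic, not a model of
   how any real SAST engine works. */
double years_to_exhaust(int bits, double checks_per_second) {
    double values = 1.0;
    for (int i = 0; i < bits; ++i) {
        values *= 2.0;                  /* 2^bits, exact in a double */
    }
    return values / checks_per_second / SECONDS_PER_YEAR;
}
```

Here `count_failures(func1)` returns 0 even though `func1(17)` returns -1. At the assumed rate, `years_to_exhaust(64, 1e9)` comes out to roughly 585 years, while a 32-bit sweep takes about 4.3 seconds.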

The Legacy SAST False Positive

SAST’s accuracy problems don’t stop at false negatives. SAST tools are also prone to false positives: alerts that take a shot in the dark at a vulnerability that may not exist in a specific application. Separating the real threats from the false positives requires a security analyst to spend time researching and resolving each individual alert.

Referring again to the code above, legacy SAST typically does a good job of starting with func1 and identifying all code paths in the resulting tree of called subroutines, although weaker scan engines have trouble understanding how conditional code paths in func1 affect conditional code paths in func2. However, SAST often struggles to 1) identify a problem in func1 and 2) determine whether that problem would result in an actual vulnerability when the program is executed. When I sit with developers and point out possible vulnerabilities in their code, I often get the following response: “Yeah, but the function will never actually be called like that at runtime. That will never happen.”
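The developer’s objection can be illustrated with a minimal C sketch. The names `func1` and `func2` echo the example above, but their bodies are hypothetical. `func2` divides without checking its divisor, so an analysis of `func2` on its own may flag a possible divide-by-zero. Its only caller, `func1`, guards against zero. Unsigned integers are used so that the division has no other undefined-behavior case, such as `INT_MIN / -1`.

```c
/* Hypothetical helper: divides with no check on `divisor`, so an analysis
   of func2 in isolation may report a possible divide-by-zero. */
unsigned func2(unsigned total, unsigned divisor) {
    return total / divisor;
}

/* Hypothetical caller: it reaches func2 only when divisor is nonzero,
   so the division can never see zero through this path at runtime. */
unsigned func1(unsigned total, unsigned divisor) {
    if (divisor == 0u) {
        return 0u;                 /* guard: func2 is never called with 0 here */
    }
    return func2(total, divisor);
}
```

Through `func1`, the flagged division never sees zero, so the report is a false positive today. If a later change adds a caller that skips the guard, the same report describes a real defect.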

This, in turn, leads to a mini-philosophical war, with rebuttals from me such as:

- “Are you sure?”

- “Can you guarantee that to your manager?”

- “What if your application changes over time in such a way that it does eventually happen?”

The bottom line is that many customers have not felt that SAST results deliver direct and immediate value. These issues add to the overall noise of SAST products and their high volume of false positives. The same remedy described for false negatives applies here as well: a SAST vendor would need to universally increase analysis times, which would put it at an extreme competitive disadvantage.

Why Contrast Is Best for Application Security Coverage

This leads us to the essential problem with application security today. While developers know if what they test does (or does not) have vulnerabilities, they do not know if they’re testing everything that matters to a specific application.

As an instrumentation-based solution working from within the code itself, Contrast Security’s DevOps-Native AppSec Platform tests all the routes that a threat can take into the application. This gives Contrast comprehensive visibility into the application runtime, so it protects against only the vulnerabilities that are actually present: if a threat targets a weakness that doesn’t appear in the specific application’s code, it can be ignored. These real-time security capabilities deliver a speed and accuracy (eliminating both false positives and false negatives) that SAST cannot match.

In terms of coverage, Contrast is much better than what you would get from legacy SAST tools today. Contrast doesn’t require extensive testing; simple end-to-end tests of basic functionality are enough. For a typical development project, this yields 60% to 70% route coverage, according to Contrast Labs research. Most importantly, Contrast offers a path to 100% coverage by highlighting exactly which routes haven’t been exercised. This allows development teams to add simple test cases that drive up their functional and security test coverage at the same time.
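Route coverage, as described here, is a simple ratio of exercised routes to discovered routes. The following C sketch assumes a hypothetical `struct route` list with made-up paths. It is not Contrast’s API or data model. It computes the coverage percentage and prints the routes that still need a test case.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical record: one discovered route and whether any test hit it. */
struct route {
    const char *path;
    bool exercised;
};

/* Hypothetical sample: three routes discovered, two hit by end-to-end tests. */
static const struct route kSampleRoutes[] = {
    { "/login",        true  },
    { "/account",      true  },
    { "/admin/export", false },
};

/* Percentage of discovered routes exercised by tests (0.0 if none found). */
double route_coverage(const struct route *routes, size_t n) {
    if (n == 0) {
        return 0.0;
    }
    size_t hit = 0;
    for (size_t i = 0; i < n; ++i) {
        if (routes[i].exercised) {
            ++hit;
        }
    }
    return 100.0 * (double)hit / (double)n;
}

/* Prints each route no test has reached; returns how many were printed. */
size_t report_unexercised(const struct route *routes, size_t n) {
    size_t missing = 0;
    for (size_t i = 0; i < n; ++i) {
        if (!routes[i].exercised) {
            printf("not exercised: %s\n", routes[i].path);
            ++missing;
        }
    }
    return missing;
}
```

With the sample data, `route_coverage(kSampleRoutes, 3)` is about 66.7%, and `report_unexercised` prints `/admin/export`, the kind of route a new test case would target.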

It also should be noted that Contrast’s coverage includes all parts of the application stack as deployed—including libraries, frameworks, server, and runtime. Our solution finds problems that are literally impossible for other tools to see. For example, at a major mutual fund company, Contrast discovered several “hidden routes” that were not visible to traditional application security solutions. These routes exposed critically dangerous administrative functions that would have allowed an attacker to compromise all the data in the application, including credentials, keys, personal data, and other secrets.

Security organizations will always be concerned about identifying and mitigating risks, and they often need to play it safe. Legacy SAST is still a politically safe choice in many organizations, even if it is outdated and technologically inferior. But the discussion above clarifies the problems that customers already know they have with SAST, and Contrast’s platform offers a superior alternative to SAST for comprehensively addressing their application security needs.

For an in-depth analysis of legacy SAST and vulnerability management, download our white paper—“How Manual Application Vulnerability Management Delays Innovation and Increases Business Risk.”