With server-side request forgery (SSRF) becoming a more important bug class in the era of microservices, I wanted to show why interactive application security testing (IAST) is the only tool for detecting SSRF accurately and why IAST results are more actionable.

I will skip the “what is SSRF” details. However, if you want a good primer, consider the write-up by the fine folks at PortSwigger, the makers of Burp Suite. Also, for those watching the OWASP Top Ten, you’ll see SSRF snagged the 10th spot in the draft release of the 2021 OWASP Top Ten.

There are two main types of application security testing (AST) tools used in today’s development environments that invite direct comparison to IAST.

Dynamic application security testing (DAST) can fuzz inputs and certainly send in SSRF attacks, but how can application security teams determine if those attacks worked? They can try file:// or other non-HTTP URL schemes to detect a vulnerability, then infer whether other reachable sites would be exposed the same way. Alternatively, Qualys uses a third-party beacon site that abuses DNS to confirm to the DAST tool that the URL given to the victim was indeed fetched by the target application. This is really cool. However, the core criticisms of DAST don't concern how good it is at exploiting a known vulnerable URL. Rather, DAST struggles to discover the URL, to format messages to it correctly, and to set up the right state.

Unfortunately, in an increasingly application programming interface (API)-driven world, DAST tools are becoming harder to onboard and operationalize for continuous testing. Of course, we do believe that there is room for them in expert-led assessments—we use them ourselves at Contrast. But with DAST, we see many false negatives that occur due to the inability to properly navigate, maintain state, and communicate correctly with the site. In addition, most DAST tools don’t offer strong post-attack verification. On the positive side, DAST yields few false positives, which is nice. Anyway, back to SSRF.

Static application security testing (SAST) tools detect SSRF as a classic data-flow rule. If user input (or input otherwise determined to be “tainted”) is ever used as part of the construction of a URL, then SAST will report it as an issue. Seems reasonable, right? Unfortunately, this leads to a lot of false positives. Because SAST doesn’t have any runtime data, it has no idea which part of the URL the taint ends up in. (Now that Contrast has its own pipeline-native static analysis scanning tool, you’ll see that we will continue to be realistic about both its strengths and shortcomings.) Said another way, SAST will flag every case where user input is used to build a URL, not only the cases where user input can cause an actual SSRF vulnerability.

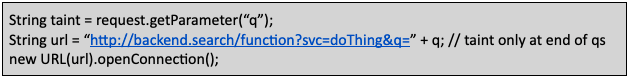

Here’s an example of code that is not vulnerable to SSRF but will be cited by a SAST tool:

Code sample that is not vulnerable to SSRF, but is cited by SAST
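The original sample is not reproduced in this text, so here is a minimal Python sketch of the kind of code being described. The endpoint `search.internal.example` and the function name `build_search_url` are assumptions made up for illustration; they are not taken from the original post.

```python
from urllib.parse import urlsplit

# Hypothetical internal endpoint, used only for illustration.
SEARCH_ENDPOINT = "https://search.internal.example/api/search"


def build_search_url(user_term: str) -> str:
    """Build the outbound URL; the user-controlled value lands after '?q='."""
    # Tainted data flows into a URL, which is all a data-flow rule looks for.
    return SEARCH_ENDPOINT + "?q=" + user_term


# A hostile value can add parameters or a fragment, but the scheme, host,
# and path were fixed before the tainted value was appended.
parts = urlsplit(build_search_url("x&admin=true#//attacker.example/"))
print(parts.scheme, parts.hostname, parts.path)
```

A source-to-sink rule sees user input reaching a URL and reports it, even though the request still goes to the same scheme, host, and path.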

We don’t love the fact that an attacker may now control part of the query string. Bad actors can do things like cause parameter pollution, provide unexpected parameters, or tamper with the URL parser. But what is the concrete SSRF risk? There is none, because the attacker cannot point the request at a different protocol, host, or path. Can you get hacked from this? Almost definitely not.

This simple source-to-sink pattern is also how Contrast’s original IAST SSRF rule worked. But our customers challenged us and told us, “We’re getting a lot of unexploitable issues reported, so we’re marking them as false positives. Can you do better?”

After investigation, we determined that customers using our first-generation rule were right about the false positives. Most of the issues we reported were not exploitable—the URL taint couldn’t change the protocol, host, or path.

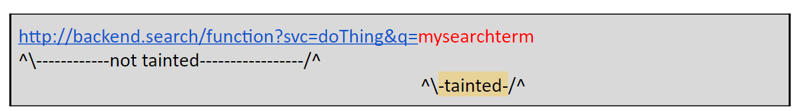

This is where the magic of having access to the runtime data really shines. Imagine that we observed the following code under test and saw a user submit “my search term” as the tainted value. The constructed URL would look like this:

Tainted URL, but not SSRF-vulnerable

With access to the runtime data, Contrast can safely parse the URL and see if the taint influences an important part of the URL (e.g., the protocol, host, or path). In our example, the taint only influences the query string, so it is not accurate to report it as an SSRF vulnerability.
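To make the idea concrete, here is a minimal Python sketch of that check. It is not Contrast's implementation: it assumes the runtime can supply the final URL string plus the character range occupied by the tainted value, and the function names and the example host are hypothetical. The component-splitting regular expression is the one given in RFC 3986, Appendix B.

```python
import re

# URI-splitting regular expression from RFC 3986, Appendix B.
URI_RE = re.compile(r"^(([^:/?#]+):)?(//([^/?#]*))?([^?#]*)(\?([^#]*))?(#(.*))?")

# Capture-group numbers for each URL component in the regex above.
COMPONENT_GROUPS = {"scheme": 2, "authority": 4, "path": 5, "query": 7, "fragment": 9}

# Components where attacker influence is treated as SSRF-relevant in this sketch.
SENSITIVE = {"scheme", "authority", "path"}


def tainted_components(url: str, taint_start: int, taint_end: int) -> set:
    """Return the URL components that overlap the range [taint_start, taint_end)."""
    match = URI_RE.match(url)  # every group is optional, so this always matches
    hit = set()
    for name, group in COMPONENT_GROUPS.items():
        start, end = match.span(group)
        if start == -1:
            continue  # component not present in this URL
        if start < taint_end and taint_start < end:
            hit.add(name)
    return hit


def is_reportable(url: str, taint_start: int, taint_end: int) -> bool:
    """Report only when the taint reaches the scheme, authority, or path."""
    return bool(tainted_components(url, taint_start, taint_end) & SENSITIVE)


# Hypothetical URL observed at runtime, with the user's value in the query string.
term = "my search term"
url = "https://search.internal.example/api/search?q=" + term
start = url.index(term)
print(tainted_components(url, start, start + len(term)))
print(is_reportable(url, start, start + len(term)))
```

For the search example the taint overlaps only the query, so nothing is reported. If the same tainted range covered the host instead, the overlap would include the authority and the finding would be reported.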

Which parts of the URL warrant reporting is a regular discussion topic at Contrast. Once we corrected this issue in Contrast Assess, our false positives decreased dramatically, customer satisfaction improved, and we moved a step closer to the most accurate experience possible for application security testing.

I am proud of our team’s continued investment in rules that outperform the competition. If we can find a way to make our rules more accurate, we will—so please bring us your ideas for improvement! You can drop me a note on LinkedIn.

Recently, I’ve been getting increasingly fired up about infosec tools that flatten or obfuscate the meaning of “accuracy” as a critical term in our industry. Accuracy means something very specific, and it should be the North Star that every security company uses for product design.

But when some companies say “accuracy,” what they appear to really mean is “recall”: the share of real vulnerabilities a tool finds, with no penalty for the false alarms it raises along the way. In other words, they measure the quantity of alerts rather than their quality. This approach demonstrates a complete lack of concern about hiding a handful of true positives within a sea of false positives. Leaving customers to tune, triage, or divine which alerts present real vulnerabilities is alarming. True positives get lost in the noise and left unfixed, while developers and security experts are ensnared in workflow bottlenecks that create a feedback loop of endless frustration. Scaling security often means customers end up turning a bunch of rules and capabilities off.

In the context of AST, “accuracy” means you get a better score for every finding you get right, but you also get points taken away every time you’re wrong. By this measure, the accuracy of almost all traditional testing tools is abysmal. So please join me in correcting vendors on phone calls, panels, Zoom meetings, at the bar, and at live conferences when they talk about the “accuracy” of their tools.
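One way to put numbers on the difference is sketched below in Python. The scoring formula here is an assumption rather than a formula stated in this post: it takes the true positive rate (recall) and subtracts the false positive rate, which is Youden's J statistic. The two tools and their counts are invented purely for illustration.

```python
def recall(tp: int, fn: int) -> float:
    """Share of real vulnerabilities that the tool reported."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def false_positive_rate(fp: int, tn: int) -> float:
    """Share of safe code locations that the tool wrongly flagged."""
    return fp / (fp + tn) if (fp + tn) else 0.0


def penalized_score(tp: int, fp: int, tn: int, fn: int) -> float:
    """Reward correct findings and subtract for wrong ones (TPR minus FPR)."""
    return recall(tp, fn) - false_positive_rate(fp, tn)


# Invented counts: a noisy tool with high recall, and a quieter, more precise one.
noisy = dict(tp=9, fn=1, fp=80, tn=20)
precise = dict(tp=8, fn=2, fp=5, tn=95)

for name, counts in (("noisy", noisy), ("precise", precise)):
    print(name, recall(counts["tp"], counts["fn"]), penalized_score(**counts))
```

With these made-up counts, the noisy tool wins on recall (0.9 versus 0.8) yet scores roughly 0.1 once false positives are subtracted, while the precise tool scores roughly 0.75.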

Until we’re all pointed in the right direction, Contrast will proudly continue to be the only company focused on both aspects of accuracy.

Additional Resources

White Paper: The Truth About AppSec False Positives: Lack of Accuracy Is Burying Organizations With Erroneous Alerts

Inside AppSec Podcast: Integrated Security Instrumentation Is the Future of AppSec
