Many vulnerabilities, including XSS, SQL injection, command injection, LDAP injection, XML injection, and more, happen when programmers send untrusted data to dangerous calls. That seems easy to prevent... if you know which data is untrusted.

And that's the difficult part. When you're looking at a million lines of source code laced with string operations, complex method calls, inheritance, aspect-oriented programming, and reflection, tracing the data flow can get extremely difficult.

So by the time the data reaches the dangerous call, it's impossible for the developer to know whether it has been validated or encoded in a way that makes it safe. If only there were a way to confidently trace that data through all that code and let the developer know!
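
As a concrete illustration of untrusted data reaching a dangerous call, here is a minimal Python sketch using the standard-library `sqlite3` module. The `users` table and the function names are hypothetical. In the first function the user-supplied value becomes part of the SQL text itself; in the second it is passed as a bound parameter, so the database never interprets it as SQL.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    # In-memory database with a hypothetical users table
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Untrusted 'name' is concatenated into the query text, so it reaches
    # the dangerous call (execute) without validation or encoding
    query = "SELECT secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Bound parameter: the driver treats 'name' strictly as data
    return conn.execute(
        "SELECT secret FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = setup()
attack = "' OR '1'='1"
print(find_user_unsafe(conn, attack))  # both rows leak
print(find_user_safe(conn, attack))    # []
```

In a real application the value would pass through many layers between the request and `execute`, which is exactly where tracing it gets hard.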

Static analysis tools attempt to trace data flow by pseudo-executing the code: they simulate every instruction and calculate its outcome without actually running the program. This works fairly well on simple 'toy' problems, but on real-world applications the results aren't acceptable.
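
To show what "pseudo-executing" means for taint, here is a deliberately tiny Python sketch that walks a straight-line program and tracks which variables hold untrusted data, without ever running it. The instruction classes and every method name (`get_request_param`, `escape_sql`, `my_escape`, `lib_format`) are hypothetical, and real tools handle branches, loops, and heap objects that this sketch ignores. It highlights one weak spot: when the analysis meets a method it has no model for, it has to guess.

```python
from dataclasses import dataclass


# Hypothetical three-address instructions for a tiny straight-line program
@dataclass
class Call:
    dst: str
    func: str
    args: list[str]


@dataclass
class Concat:
    dst: str
    srcs: list[str]


@dataclass
class Sink:
    func: str
    arg: str


# Hypothetical method models the analysis depends on
SOURCES = {"get_request_param"}
SANITIZERS = {"escape_sql"}
PROPAGATORS = {"strip", "lower"}


def analyze(program, unknown_propagates: bool) -> list[Sink]:
    """Pseudo-execute the program over taint facts instead of real values."""
    tainted: set[str] = set()
    findings: list[Sink] = []
    for ins in program:
        if isinstance(ins, Sink):
            if ins.arg in tainted:
                findings.append(ins)
            continue
        if isinstance(ins, Concat):
            result_tainted = any(s in tainted for s in ins.srcs)
        else:  # Call
            args_tainted = any(a in tainted for a in ins.args)
            if ins.func in SOURCES:
                result_tainted = True
            elif ins.func in SANITIZERS:
                result_tainted = False
            elif ins.func in PROPAGATORS:
                result_tainted = args_tainted
            else:
                # No model for this method, so the analysis must guess
                result_tainted = unknown_propagates and args_tainted
        if result_tainted:
            tainted.add(ins.dst)
        else:
            tainted.discard(ins.dst)
    return findings


# An in-house escaping helper the tool has no model for (actually safe)
sanitized_flow = [
    Call("name", "get_request_param", []),
    Call("clean", "my_escape", ["name"]),
    Concat("query", ["prefix", "clean"]),
    Sink("execute", "query"),
]
# A third-party formatter the tool has no model for (actually vulnerable)
vulnerable_flow = [
    Call("name", "get_request_param", []),
    Call("text", "lib_format", ["name"]),
    Sink("execute", "text"),
]

for label, prog in [("sanitized", sanitized_flow), ("vulnerable", vulnerable_flow)]:
    for guess in (True, False):
        print(label, guess, len(analyze(prog, unknown_propagates=guess)))
```

Whichever default is chosen for unmodelled methods, one of the two flows comes out wrong: assuming propagation flags the sanitized flow (a false alarm), while assuming no propagation misses the vulnerable one. The only fix within this approach is to write a model for each such method.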

You can check out the study that the NSA's Center for Assured Software conducts each year on static analysis tools. They created a test suite of over 25,000 test cases (called Juliet), and the static analysis tools correctly pass only a tiny fraction of them. These test cases aren't nearly as complex as real code: their data flows are simple and relatively direct. In the real world, data flows can span dozens of steps through call stacks that are 50 frames deep or more.

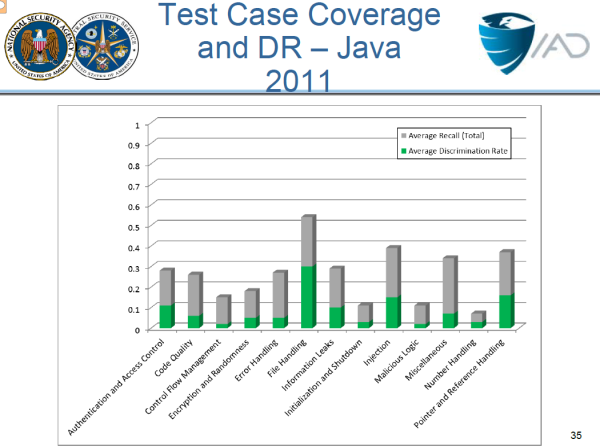

Here's a slide from the NSA's presentation on their study that shows how the top static analysis tools performed against the NSA test suite. It's not an encouraging chart, particularly when you realize that the grey part of each bar represents false alarms. The green part (mostly below 10%) is the rate at which real vulnerabilities were correctly identified.

There is another approach. With Contrast, we track actual data through the running application itself. Because we observe where untrusted data really travels instead of estimating it, we never make a mistake about the data flow, and we avoid large numbers of false alarms. We correctly pass 73% of the entire NSA test suite, and 99% of the test cases that represent "web application" type flaws, such as those in the OWASP Top Ten.
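
The core idea of tracking actual data at runtime can be sketched in a few lines of Python: flag a value when it enters from an untrusted source, let the flag ride along with the value, and check for it at the dangerous call. This is a minimal hypothetical sketch, not Contrast's implementation; `TaintedStr`, `read_request_param`, `escape_sql_literal`, and `is_tainted` are illustrative names, and only string concatenation propagates the flag here.

```python
class TaintedStr(str):
    """A str flagged as coming from an untrusted source (hypothetical sketch)."""

    def __add__(self, other):
        # tainted + anything: the result contains untrusted characters
        return TaintedStr(str.__add__(self, other))

    def __radd__(self, other):
        # plain str + tainted: Python tries this reflected method first
        # because TaintedStr is a subclass of str
        return TaintedStr(str.__add__(other, self))


def read_request_param(raw: str) -> TaintedStr:
    # Hypothetical source: values from the HTTP request are untrusted
    return TaintedStr(raw)


def escape_sql_literal(value: str) -> str:
    # Hypothetical sanitizer: doubles single quotes and returns a plain str,
    # which is how this sketch records that the value is now safe for SQL
    return str(value).replace("'", "''")


def is_tainted(value: str) -> bool:
    # Hypothetical sink check, e.g. run just before a query executes
    return isinstance(value, TaintedStr)


name = read_request_param("x' OR '1'='1")
unsafe = "SELECT * FROM users WHERE name = '" + name + "'"
safe = "SELECT * FROM users WHERE name = '" + escape_sql_literal(name) + "'"
print(is_tainted(unsafe))  # True
print(is_tainted(safe))    # False
```

Nothing here predicts the flow: the check at the sink looks at the value that actually arrived. Escaping is used only to show how a sanitizer clears the flag; bound query parameters remain the better fix for SQL.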

There's another huge advantage to the Contrast approach. Static analysis tools have to identify every method that propagates untrusted data; otherwise, their pseudo-execution can't correctly calculate the data flow. Because we track data through libraries, frameworks, and even the underlying runtime platform, there's no need to model each new framework and component that comes out. We simply track the actual values at runtime. It's simpler and more accurate and, more importantly, it keeps up with the libraries and frameworks you're using.
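
To illustrate why tracking at the level of basic operations removes the need for per-library models, here is a hypothetical Python sketch. The propagation rules live on the string type itself (`TaintedStr` overrides concatenation, slicing, `strip`, `lower`, and `replace`), and `make_slug` stands in for third-party code that the tracker has no specific knowledge of. All names are illustrative assumptions.

```python
class TaintedStr(str):
    """Hypothetical sketch: propagation rules attached to the string type."""

    def __add__(self, other):
        return TaintedStr(str.__add__(self, other))

    def __radd__(self, other):
        return TaintedStr(str.__add__(other, self))

    def __getitem__(self, key):
        # Indexing and slicing return part of the untrusted value
        return TaintedStr(str.__getitem__(self, key))

    def strip(self, chars=None):
        return TaintedStr(str.strip(self, chars))

    def lower(self):
        return TaintedStr(str.lower(self))

    def replace(self, old, new, count=-1):
        return TaintedStr(str.replace(self, old, new, count))


def make_slug(title: str, max_len: int = 20) -> str:
    # Stand-in for library code the tracker has no model for
    slug = title.strip().lower().replace(" ", "-")
    return "/posts/" + slug[:max_len]


tainted_url = make_slug(TaintedStr("  Hello World <script>  "))
plain_url = make_slug("Plain Title")
print(tainted_url, isinstance(tainted_url, TaintedStr))  # /posts/hello-world-<script> True
print(plain_url, isinstance(plain_url, TaintedStr))      # /posts/plain-title False
```

The flag survives `make_slug` without anyone describing what `make_slug` does. This only approximates the idea: any `str` operation the sketch does not override, such as `str.join`, returns a plain `str` and silently drops the flag, which is a reason to instrument the runtime's own string handling rather than wrap a type in application code.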

If you want accurate vulnerability findings, you need great data flow analysis.