By Jeff Williams, Co-Founder, Chief Technology Officer

April 18, 2014

In John Godfrey Saxe's retelling of *The Blind Men and the Elephant*, six blind men try to teach each other what an elephant is 'like'. They each take hold of a different part of the elephant and proclaim they know what an elephant is. In reality, they know only what they have experienced and, as a result, don't know what an elephant really looks like. Until all of the views are compiled together, they can't quite be certain. (You really should read the poem.) Application security is a lot like this.

We have many different ways to 'view' an application, and your choice can seriously bias your results.

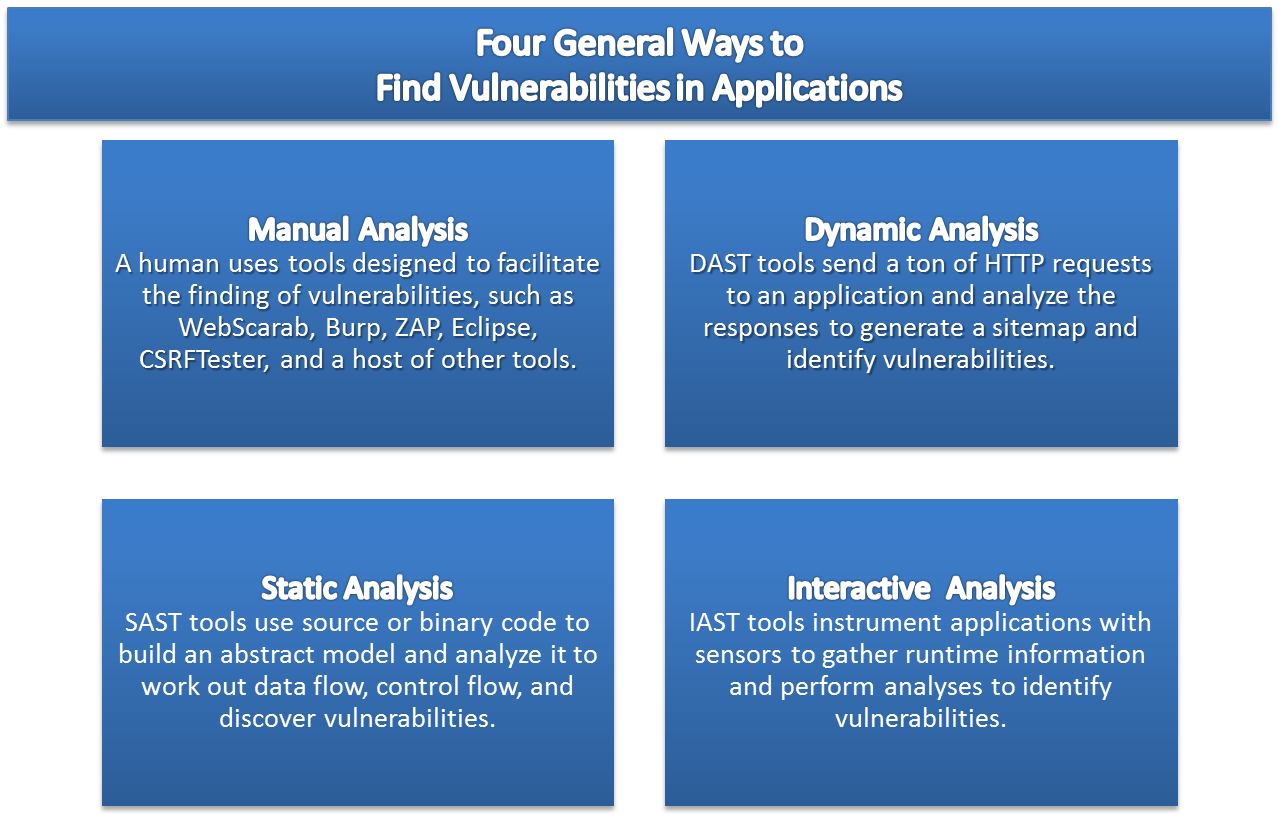

There are generally four ways to find vulnerabilities in applications: manual testing, dynamic analysis testing, static analysis testing, and interactive analysis testing. Each of them is useful in its own right, and when used together they can produce effective results. I've laid out these four approaches above. Now, full disclosure: I've misled you, because this whole approach to categorizing application security tools isn't helpful.

It's misleading because it focuses on *how* information is gathered, rather than *what* information is available to identify vulnerabilities. Who cares *how* the information was gathered? What matters is how complete, accurate, and useful the information is.

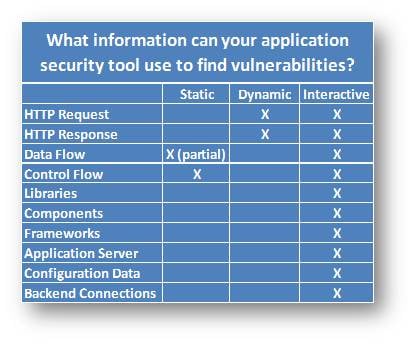

As it turns out, the evidence you need to accurately identify and describe a vulnerability is usually scattered across a number of places. The big difference between the various tools is not whether they are static, dynamic, or interactive. What's important is what information the tools have available to identify vulnerabilities. Let's look at what each type of tool has to work with:


Static analysis security testing tools only have information from the source code. From this they can approximate the control flow and data flow. But because they mostly don't look at libraries, components, and frameworks, they have only a limited view of the application. They also have no insight into HTTP requests and responses or backend connections. Given this, it's not surprising that over 80% of what they report are false alarms.
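To make that limitation concrete, here is a minimal sketch of a source-only check in Python, using the standard `ast` module. The rule it applies (flag any `execute(...)` call whose query argument is built with `+`, `%`, or an f-string) and the `build_query` helper in the sample are hypothetical illustrations, not how any particular static analysis product works.

```python
import ast

def find_suspicious_execute_calls(source: str) -> list[int]:
    """Toy source-only rule: flag `<obj>.execute(...)` calls whose first argument
    is built with `+`/`%` (a BinOp) or an f-string (a JoinedStr)."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "execute"
            and node.args
            and isinstance(node.args[0], (ast.BinOp, ast.JoinedStr))
        ):
            hits.append(node.lineno)
    return sorted(hits)


APP_SOURCE = '''
def get_user(cursor, user_id):
    cursor.execute("SELECT * FROM users WHERE id = " + user_id)

def get_order(cursor, order_id):
    # build_query is a hypothetical third-party helper; its source is not
    # part of what the analyzer was given, so this call looks harmless.
    cursor.execute(build_query("orders", order_id))
'''

print(find_suspicious_execute_calls(APP_SOURCE))  # [3]
```

The second call is the interesting one. Because the analyzer cannot see inside `build_query`, it has to guess: ignore the call and risk missing a real flaw, or flag it and risk a false alarm.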

Dynamic analysis security testing tools only have information from HTTP requests and responses. This means that everything they know about an application has to be gleaned from the HTTP responses. Some of these tools do amazing things given the lack of information. For example, they can sometimes identify blind SQL injection based purely on the timing of HTTP responses. But there are far simpler and more accurate ways to identify blind SQL injection.
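As an illustration of how indirect this is, here is a minimal sketch of the decision step a dynamic tool might use for time-based blind SQL injection. It assumes the tool has already sent some requests without a payload and some with a delay payload (for example, one that calls MySQL's `SLEEP(5)`). The function name, the 0.8 threshold, and the sample timings are hypothetical, chosen only to show the idea.

```python
import statistics

def looks_time_based_injectable(baseline_s, delayed_s, injected_delay_s, fraction=0.8):
    """Heuristic check for time-based blind SQL injection.

    baseline_s: response times (seconds) for requests without a payload
    delayed_s: response times for requests carrying a delay payload
    injected_delay_s: the delay the payload asks the database for
    fraction: how much of that delay must show up (hypothetical default)
    """
    if not baseline_s or not delayed_s:
        raise ValueError("need at least one timing sample of each kind")
    # Compare medians so a single slow outlier doesn't dominate.
    slowdown = statistics.median(delayed_s) - statistics.median(baseline_s)
    # Also require every payload request to be slower than every baseline
    # request, to reduce false positives from network jitter.
    consistent = min(delayed_s) > max(baseline_s)
    return consistent and slowdown >= fraction * injected_delay_s


# Hypothetical timings (seconds), for illustration only.
print(looks_time_based_injectable([0.21, 0.19, 0.25], [5.23, 5.18, 5.30], injected_delay_s=5))  # True
print(looks_time_based_injectable([0.20, 0.30, 4.90], [0.25, 0.22, 0.31], injected_delay_s=5))  # False
```

Even when this returns `True`, the tool only knows that one parameter made the server slow down; it still doesn't know which query, which line of code, or which database library was involved.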

Interactive analysis security testing tools, like Contrast™, actually include some static, some dynamic, and some totally new types of analysis. Because the Contrast agent runs on the application server and its sensors gather information directly from the running application, it has access to the HTTP requests and responses, code, and libraries. Its data and control flow engines are based on the actual running application, not an abstract simulation. It also has direct access to configuration, library, framework, and backend connection information. The best part is that Contrast has all this information integrated together, in context.

For example, when we identify SQL injection, we know the exact HTTP request parameter it came from, the full data flow, the framework that parsed it from the form, the exact lines of code involved, the database library, the backend connection, the configuration data, and more. All of this information is integrated, in context, during the analysis; we are not simply smushing together the results of two separately running tools. This not only allows extremely accurate vulnerability identification, but also lets us give developers all the information they need to remediate problems quickly and effectively.
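The general technique behind this kind of in-context tracking is dynamic taint tracking: mark data from the HTTP request as untrusted, propagate that mark (and the path it took) as the running code manipulates it, and check it when it reaches a sensitive sink such as a SQL call. The sketch below is a deliberately tiny Python model of that idea, assuming explicit wrapper objects, whereas a real agent would typically hook these operations inside the runtime itself. It is not Contrast's implementation, which this article doesn't describe, and the names `Tainted`, `execute_sql`, and `find_user` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Tainted:
    """Hypothetical model: a string carrying its untrusted origin and the path it took."""
    value: str
    source: str                                     # where the data entered the app
    trail: list[str] = field(default_factory=list)  # steps it flowed through

    def concat(self, prefix: str, where: str) -> "Tainted":
        # Propagation rule: text built from tainted text stays tainted,
        # and the step is appended to the recorded data flow.
        return Tainted(prefix + self.value, self.source, self.trail + [where])


def execute_sql(query, where: str) -> Optional[dict]:
    """Sensor at the database sink: report if an untrusted value reached the query text."""
    if isinstance(query, Tainted):
        return {
            "rule": "sql-injection",
            "source": query.source,
            "flow": query.trail + [where],
            "query": query.value,
        }
    return None  # plain query text: no tainted data reached it


def find_user(params: dict) -> Optional[dict]:
    # The framework parses the request; the model marks the parameter as tainted.
    user_id = Tainted(params["id"], source="HTTP parameter 'id'",
                      trail=["form parsing by web framework"])
    query = user_id.concat("SELECT * FROM users WHERE id = ",
                           where="find_user(): string concatenation")
    return execute_sql(query, where="database driver: execute()")


finding = find_user({"id": "1 OR 1=1"})
print(finding["source"])  # HTTP parameter 'id'
print(finding["flow"])
```

A parameterized query such as `execute_sql("SELECT * FROM users WHERE id = ?", where=...)` passes a plain string, so no finding is produced; the untrusted value would travel separately as a bound parameter.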

Why do we like the Contrast approach so much? Because we can use *ALL* this information, in real time, without any extra hardware or infrastructure, and without requiring a security expert to run the tool. There really is no comparison -- Contrast simply has too many advantages over existing approaches.

So maybe it's time to stop thinking of tools in terms of "static" or "dynamic." What really matters is what information the tools have access to. Which brings us back to the blind men trying to work out what an elephant looks like. At the end of the day, static and dynamic tools do the best they can with the limited information they have; we just think it's time to take the blindfolds off.


Jeff brings more than 20 years of security leadership experience as co-founder and Chief Technology Officer of Contrast Security. He recently authored the DZone DevSecOps, IAST, and RASP refcards and speaks frequently at conferences including JavaOne (Java Rockstar), BlackHat, QCon, RSA, OWASP, Velocity, and PivotalOne. Jeff is also a founder and major contributor to OWASP, where he served as Global Chairman for 9 years, and created the OWASP Top 10, OWASP Enterprise Security API, OWASP Application Security Verification Standard, XSS Prevention Cheat Sheet, and many more popular open source projects. Jeff has a BA from Virginia, an MA from George Mason, and a JD from Georgetown.
